I am super-interested in automated cars. The promise of additional safety on the roads (performance) and time freed up (productivity) is something I am really looking forward to. As a developer and a team lead, I feel the same way about automated code generation. I’m hoping that automated tools can generate higher quality code (performance) at a higher rate than me and my team could manage otherwise (productivity).

Like automated driving, automated code writing (like many things in life) is on a path from a completely manual task to the utopian goal of complete automation. Navigating that path is quite tricky: it means understanding how mature the tools are, their benefits and disadvantages, and the changes in human behaviour that they require. However, there is some good news…

Automated driving is a well defined topic. Given that 70% of adults in the UK drive (no doubt it’s more in countries like the USA), it’s something I found easy to relate to when thinking about how to judge the progress of code writing tools. SAE, the international automotive standards body, has defined “levels of autonomous driving” which categorize cars with (often AI-powered) driving aids using some fairly discrete criteria. I wondered if similar ‘levels’ of coding automation could give us a better understanding of the tools we might use as software developers.

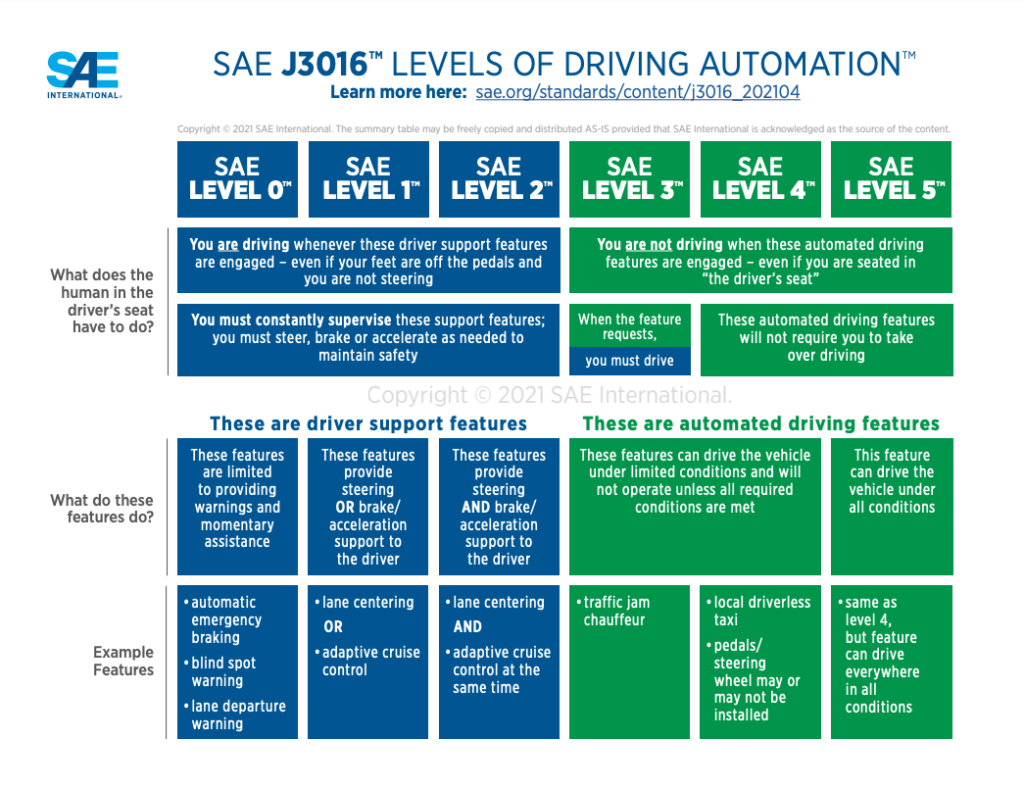

Levels of Driving Automation

The graphic above shows the SAE Levels of Driving Automation. It’s clear that the most important step is between level 2 and level 3. Why? Because at level 2 the human is still responsible for the entire car, though some tasks have been handed over to automation: “you are driving” the car. This is like Ford’s BlueCruise (“Hands off, eyes on”) and Tesla’s Autopilot (however much Elon Musk might want you to believe they’re at level 5 already!).

From level 3 the automation is responsible for the entire car but can sometimes hand control back to the driver for additional input. In a car with features that put it at level 3 or above, “you are not driving”.

(As a side note, when it comes to automated driving some argue that the challenge of moving the classification of your car from level 2 to level 3 is actually more about legal liability than technological capability. But we’ll put that to one side for now and just think about the concept.)

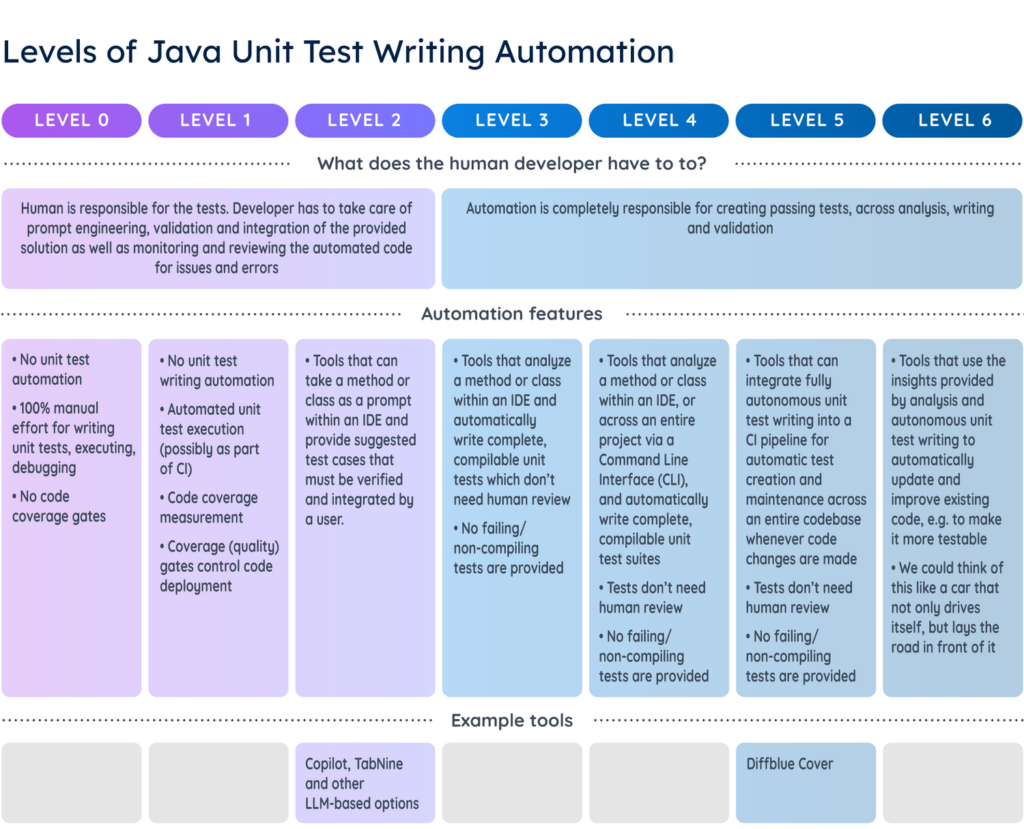

A framework for automated Java code writing

For cars this seems to provide a pretty sensible, standardized way to judge how automated they are, what kind of capabilities we should expect, and how much responsibility we ‘users’ should expect to take in each case.

Given the proliferation of new AI-powered software development tools, many of which say they can write at least some code for us, the SAE structure got me thinking about whether we could apply the same idea to automated code writing. Perhaps something similar could help development teams like the one I work in better understand how to think about some of the new tools, and what we should expect from them.

At Diffblue our product writes Java unit tests, so that was the framework that came to mind first. Let’s take a look at what Levels of Java Unit Test Writing Automation might look like.

Level 0

No unit test automation; 100% manual effort for writing, executing and debugging unit tests. No code coverage gates.

Level 1

No unit test writing automation. Automated unit test execution (possibly as part of CI), plus code coverage measurement. Coverage (quality) gates control code deployment.
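To make the idea of a coverage gate concrete, here is a minimal sketch. The class name, the line counts and the 80% threshold are all assumptions made up for illustration; in a real pipeline the numbers would come from a coverage tool's report rather than being hard-coded.

```java
import java.util.Locale;

// Hypothetical coverage gate. The names and the 80% threshold are
// illustrative assumptions, not taken from any particular tool.
class CoverageGate {

    /** Returns line coverage as a fraction between 0.0 and 1.0. */
    static double lineCoverage(int coveredLines, int totalLines) {
        if (totalLines <= 0) {
            throw new IllegalArgumentException("totalLines must be positive");
        }
        return (double) coveredLines / totalLines;
    }

    /** The gate passes only when coverage meets or exceeds the threshold. */
    static boolean passes(int coveredLines, int totalLines, double threshold) {
        return lineCoverage(coveredLines, totalLines) >= threshold;
    }

    public static void main(String[] args) {
        double threshold = 0.80; // assumed team policy
        int covered = 412;       // assumed values; normally read from a report
        int total = 500;

        String percent = String.format(Locale.ROOT, "%.1f%%",
                lineCoverage(covered, total) * 100);
        if (passes(covered, total, threshold)) {
            System.out.println("Coverage " + percent + ": gate passed");
        } else {
            System.out.println("Coverage " + percent + ": gate failed, blocking deployment");
        }
    }
}
```

Note that at this level the gate only measures and enforces; every test that contributes to the coverage figure is still written by hand.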

Level 2

Tools that can take a method or class as a prompt within an IDE and provide suggested test cases that must be verified and integrated by a user. Examples include tools like GitHub Copilot, TabNine and other LLM-based options.

Level 3

Tools that analyze a method or class within an IDE and automatically write complete, compilable unit tests which don’t need human review. No failing/non-compiling tests are provided.

Level 4

Tools that analyze a method or class within an IDE, or across an entire project via a Command Line Interface (CLI), and automatically write complete, compilable unit test suites which don’t need human review. No failing/non-compiling tests are provided.

Level 5

Tools that can integrate fully autonomous unit test writing into a CI pipeline for automatic test creation and maintenance across an entire codebase whenever code changes are made. Tests don’t need human review. No failing/non-compiling tests are provided. Diffblue Cover currently sits here.

Level 6

Tools that use the insights provided by analysis and autonomous unit test writing to automatically update and improve existing code, e.g. to make it more testable. We could think of this like a car that not only drives itself, but lays the road in front of it and upgrades the engine while driving.

As with autonomous vehicles, the most important line in this framework is between level 2 and level 3.

At level 2, the human is still responsible for the tests. While some tasks have been handed over to automation, for each test the prompt engineering, validation and integration of the provided solution can easily still take up a large amount of time. Monitoring and reviewing the automated code for issues and errors is also something humans find particularly tricky, which may impact the quality of the tests. Level 2 tools often still require the involvement of senior developers who have the technical expertise to properly understand the potentially complex code under test.

At level 3+ the automation is completely responsible for the analysis, writing and validation involved in creating passing unit tests. Though it may still not be able to generate 100% test coverage, an expert developer isn’t needed to monitor the tests it does create, freeing them to spend more time on implementing the next feature.
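The levels and the responsibility boundary described above can be sketched as a small Java enum. This is only one illustrative way to model the framework: the type names, the one-line summaries and the method `humanResponsibleForTests` are assumptions introduced for this example, not part of any tool's API.

```java
// Illustrative model of the levels described in this post.
// All names here are assumptions made for the sake of the example.
class LevelsDemo {
    public static void main(String[] args) {
        // Print each level with who is responsible for the resulting tests.
        for (TestAutomationLevel level : TestAutomationLevel.values()) {
            String owner = level.humanResponsibleForTests() ? "human" : "automation";
            System.out.println(level + ": " + level.summary()
                    + " (tests owned by: " + owner + ")");
        }
    }
}

enum TestAutomationLevel {
    LEVEL_0("Fully manual test writing, execution and debugging"),
    LEVEL_1("Automated test execution with coverage gates"),
    LEVEL_2("Suggested tests that a user must verify and integrate"),
    LEVEL_3("Complete tests for a method or class, written in the IDE"),
    LEVEL_4("Complete test suites for a project, via IDE or CLI"),
    LEVEL_5("Autonomous test writing and maintenance in CI"),
    LEVEL_6("Automation that also improves the code under test");

    private final String summary;

    TestAutomationLevel(String summary) {
        this.summary = summary;
    }

    String summary() {
        return summary;
    }

    /** Below level 3 the human is still responsible for the tests. */
    boolean humanResponsibleForTests() {
        return ordinal() < LEVEL_3.ordinal();
    }
}
```

The single comparison in `humanResponsibleForTests` is the point of the sketch: everything below level 3 leaves the tests in human hands, and everything from level 3 up hands them to the automation.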

Summary

As we as a development community navigate the path to autonomous code writing, there will be frustrations and setbacks as we attempt to employ automation to improve the performance and productivity of our teams.

These frustrations often stem from the human tendency to get hyped about the potential of new technologies: we inflate our expectations without a realistic guide to the current state of the technology or to what we should expect when assessing its utility.

If you’re trying the latest AI-powered code creation tools like Copilot or Cover, take a moment to consider where they fit in the Levels of Unit Test Writing Automation. Try to keep in mind where the boundaries and responsibilities lie between human and machine, and the changes in behaviour needed to support the technologies when assessing the overall costs and benefits.

If you’d like to learn more about the differences between Diffblue Cover and GitHub Copilot that help to put Cover into Level 5, check out this blog. Or you can try Cover for free today to see how fully autonomous unit test writing works in Java applications.